I’m experimenting with what is hopefully a simpler and more readable blog layout. Sans-serif font: yay or nay?

Category: Uncategorized

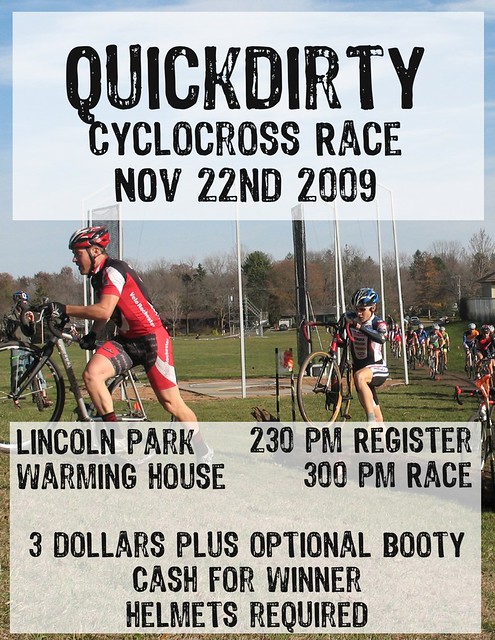

[portfolio] QUICKDIRTY race flyer

My ongoing battle with GeoMOOSE 2.0.1 un Ubuntu 9.04

SOLVED! Skip to the bottom if you want the answers.

This past week I attended several presentations at the North Dakota GIS Users Conference in Grand Forks. The last workshop I was able to see was a general overview of setting up a web-based mapserver. There are several reasons for wanting to do this, but mostly it’s a way to share data with other people without having to move entire datasets to everyone who wants to view them.

I was most interested in the open-source web application GeoMOOSE, which allows users to set up web-based mapservers using pretty much whatever kinds of data layers are available (Google Maps, shapefiles, etc.). When I finally got around to trying this out for myself last night, I ran into problems right off the bat. Hopefully I can explain some of the things that went wrong for me and help someone out, especially if I can figure out just how to make this thing work (and I have a project in mind that would be perfect).

I tried to install first on my Powerbook G4, since I’ve been able to run various Linux/Unix programs on it before and web applications shouldn’t actually require an automated installer (I’m comfortable editing textual configuration files). I had no luck, potentially because I was trying to follow the online instructions for Unix and my Apache installation isn’t set up in quite the same way.

This morning I’m back into it on my Ubuntu Linux server (9.04 Jaunty Jackalope server) and trying to install everything exactly as it was explained. I installed MapServer (which I think is required and not just suggested for GeoMOOSE 2.0.1) via apt-get install:

sudo apt-get install cgi-mapserver mapserver-bin mapserver-doc php5-mapscript python-mapscript

It seems to work, because I can go to the MapServer URL (http://localhost/cgi-bin/mapserv) and get a message (“No query information to decode…”) that matches the one described on this page (step 8)/. Note that /cgi-bin/ is not in your web directory (in my case, /var/www/) but in /usr/lib/cgi-bin and then Apache aliased to be at the MapServer URL I have above. I have not tried to install the tutorial/demo, but that is next on my list of things to do.

Next, I “installed” GeoMOOSE according to the online instructions for Unix. A few notes:

- Don’t unpackage the files to your web directory. They don’t need to go there because the configuration script will put files in other places that are actually the ones being used, and keeping those files in your web directory may be confusing. I know it was for me.

- Files will be installed to /opt/geomoose2/ and you will be told to add a line to your Apache httpd.conf file (in Apache2 this is /etc/apache2/apache2.conf). This line references a GeoMOOSE file that sets Apache aliases (shortcuts) from your web directory to /opt/geomoose/htdocs. The line I had to use was

Include /opt/geomoose2/geomoose2_httpd.conf

even though the instructions are missing the “2” on the directory name. I only found this out because I tried to restart Apache and it threw an error.

- What the geomoose2_httpd.conf file does is set up Apache aliases from your web directory to the actual GeoMOOSE files in /opt/geomoose2/htdocs. Remember, these aliases will not show up in your directory structure but they will work if you go to the URL with your browser, such as http://localhost/geomoose2/geomoose.html. I’ve no idea why the filename is geomoose2.html rather than index.html as it seems to have been in previous versions.

This, unfortunately, is where I stand. I can access what looks like the GeoMOOSE page via the URL above, but it doesn’t load maps or throw errors. I’m not sure it even sees MapServer, even though I checked the /opt/geomoose2/conf/settings.ini file to make sure the path was correct to MapServer.

I am still trying to figure out a solution and will be sure to post if I do.

UPDATE 11:26 AM.

The MapServer tutorial seems to work, as far as I’ve taken it. Even thought the site claims that all the URLs are designed for Linux/Unix, that is incorrect, so first I had to change the URLs I was using and THEN rearrange the data structure of the tutorial files (downloaded as a zip) in order to get everything displaying correctly. In any case, MapServer seems to know how to display things.

UPDATE 2:27 PM.

Success! First, I emailed the geomoose-users list for some help. The first reply reminded me that during the GIS presentation the Firefox addon Firebug had been mentioned. This addon allows you to see what data are going to and from your browser when you try to load a page.

I downloaded Firebug and immediately got to see (from the Console tab) that my “dynamically loaded extensions aren’t enabled…” After doing some looking online, I edited php.ini to set “enable_dl=On” where it had been “enable_dl=Off” before, then restarted Apache.

No maps yet, but another error: “unable to load dynamic library ‘/usr/lib/php5/20060613+lfs/php_dbase.so'” which was essentially saying that I was missing another library. Long story short, this is what I did, although I had to download the complete source from PHP.net rather than apt-getting it because the dbase directory was mysteriously missing. This included using apt-get to install beforehand the php5-dev package to run phpize. Note: even though my current PHP version is 5.2.6, I downloaded the source for 5.2.11 and everything seems to work okay (for one of the commentors here too).

Right around the time I finished up I got another helpful email–which showed that I was on the right track. I’m just glad I didn’t have to compile PHP from source!

You can see the active map (just the original demo at this point) at http://www.protichnoctem.com/geomoose2/geomoose.html.

[activity] 2009 North Dakota GIS Users Conference

I attended the 2009 North Dakota GIS Users Conference on November 2-4 but did not present. Saw a lot of good talks, notably on working with LiDAR data, serving online maps, and cross-border data harmonization.

2009 North Dakota GIS Users Conference

I attended the 2009 North Dakota GIS Users Conference on November 2-4 but did not present. Saw a lot of good talks, notably on working with LiDAR data, serving online maps, and cross-border data harmonization.

Renting PDFs: Commentary

I’ve taken the time this morning to read the post at The Scholarly Kitchen on DeepDyve and the idea of renting access to scholarly articles. I haven’t played with the DeepDyve site at all yet, but some comments show a less-than-promising access model at present.

What is most attractive about this model is that it does act as iTunes or Netflix in the most fundamental way: you have one site that you go to, search, and download (well, view) from for all of the publishers who are involved. One username, one password, one bank account, and a way to see how much you’re spending on articles every week/month/year.

The $0.99 pricepoint is interesting, and I’m not sure how feasible that is in the long run: the issue of JVP in front of me has 32 discrete “articles,” so if this is about average I’ve been getting ~128 articles per year for my $75 student dues, which comes out to ~$0.59 an article, making it more economical to just become an SVP member and get all the extras that come with that as well. This is great, but I know that the majority of the time what I do with JVP is skim through the volume to see if there is anything interesting, read the one or two most interesting articles in more depth, and put it on a shelf (keep in mind that my main study area is not vertebrate paleontology). If I could skim the whole volume (view all text, figures, and tables) but only download those articles I wanted to read and keep, it would save me money.

Unfortunately this is not currently available, but then again I’m not sure how many people “read” journals like I do. If the DeepDyve model allowed people to freely view a certain number of articles per month without downloading them, would it have more success? How many people want to download, print out, and keep a copy of the work they are citing, rather than reading through the article to find the information they need, writing it down, citing it, and never looking at the paper again? I’ve found that a lot of the information I need is scattered throughout papers, especially historical ones, making this sort of “tactical strike” methodology impossible if I want to see the whole picture.

I’ve always been one to look through whatever volume through which I’m looking to find the article I need out of curiosity’s sake, just in case a related (or otherwise interesting) article can be copied/scanned/downloaded at that time without too much more effort. From conversations I’ve had over the last few years, I’ve realized that I’m not the only one to do this, and that it becomes even more important when looking at older historical works which may have been collaborations split into separate articles within the volume. At least one individual structures his own PDF library according to journal-volume rather than author-date in order to preserve the original collections. (PDF library/reprint structure deserves its own post.)

All in all, I’m not sure how a rental model will decrease piracy of scholarly articles (that sounds amazingly nerdy!). Already, Jill Emery commented that she tried to screenshot a DeepDyve “rental” and it worked, although you only get half a page at a time. If the article were worthwhile, I’d probably take the 10 minutes to screenshot and build a quick and dirty PDF–and I doubt I’m alone here. Piracy gets you a whole article to keep forever and is free; renting costs money and is more difficult to read, even if it’s easier to get. If you don’t have access to a scholarly pirating network (aarr!) and can’t wait a few days (e.g., to ask around on listservs), the DeepDyve model will probably work out, but I’m not so sure about paleontologists–we’re used to deep time.

Buying PDFs: Commentary

This post was originally a comment on Andy’s post “Buying PDFs: Truth and Consequences” at The Open Paleontologist blog. The text grew too long, so I’m devoting a full post to it, even though it’s a bit rough. The topic is how much we pay for PDFs of published articles, and why this is so disproportionate to physical copies.

People who know me already know what my suggested “solution” is, which is to share as many PDFs with as many people as possible in order to help the publishers reevaluate their prices, however…legality prevents me from supporting taking such action. This is modeled after the philosophy of Downhill Battle: in order to get radio stations to play music beyond the mainstream (paid for by the record companies), we need to bankrupt the record companies, essentially by quitting buying music, or at least music produced by the largest companies who pay the biggest bucks toward keeping their music on the air.

I’m not sure if Andy has a citation for his observation that publishers like Elsevier that continue “to post profits in the midst of the recession”? Having someone play with those numbers a bit would be interesting to do.

This ends up being like gas prices. I get that as a business you get to set your prices as the market will bear, but the strategy of moving more merchandise rather than more expensive merchandise should always be something to consider. How much research do these publishers do as far as sub-fields go? As you say, hospitals can pay top dollar for a single article, but more paleontologists will buy an article if it’s cheaper (especially if they are unaffiliated), will be able to do the research they want, and will be looking for a place to publish.

On that note, I hope people continue to vote with their feet when it comes to open-access vs. closed-access, or even if some journals have slightly lower per-PDF fees. I’ve had the discussion recently about what “high impact” means anymore: nothing. It used to mean that the physical journal was available in more libraries and hence better-read and better-cited, but since everything goes to PDF now, everything (new) is equally available to someone who can do a halfway decent job of searching. This gives us all the freedom to publish in journals with whose practices we agree, rather than who has a wider physical distribution.

Ichnowiki: Anomoepus

[EDIT: Ichnowiki is no more. 2014-02-07]

This weekend I’m getting back to working on Ichnowiki. The first article I found on my desktop was Milner et al. (2009) from PLoS ONE, so I started off with Anomoepus. If anyone else is interested in tracking down references and describing this taxon, by all means jump in!

References:

Milner, Andrew R. C., Harris, Jerald D., Lockley, Martin J., Kirkland, James I. and Matthews, Neffra A. 2009. Bird-like anatomy, posture, and behavior revealed by an early Jurassic theropod dinosaur resting trace. PLoS ONE 4(3): e4591. doi:10.1371/journal.pone.0004591.

Meta: Changes to Protichnoctem

I’ve recently gone through my old posts and removed those that no longer fit what I think this blog should be. I’ve had the most satisfaction with content-heavy posts that either describe how to do something (with computers, usually) or discuss something I’ve just learned and I think could be interesting to other people.

I’ve left some of the lighter posts because they are related to geology/paleontology/science in some way, and I’ve left some of the geopolitical or economic posts because I still generally agree with what I took the time to write down. In the future, I hope to do more research-oriented blogging.

Although I’ve had this blog address since fall 2004, I’m considering moving everything to my server just to consolidate my online presence. The address is www.protichnoctem.com. Now that I’ve cut down on the number of posts here, this may be possible with a minimal amount of work.

Improved Blockquotes

I just updated the way blockquotes are displayed on this blog. I plan on improving it in other ways, but lunchtime is just about over.

This is how I did it, via this list of cool CSS techniques I never bothered to learn.